Systems Engineering and Electronics, 2022, Vol. 44, Issue 1: 199-208. doi: 10.12305/j.issn.1001-506X.2022.01.25

Section: Systems Engineering

Scheduling strategies research based on reinforcement learning for wartime support force

Bin ZENG¹, Rui WANG²*, Houpu LI³, Xu FAN¹

1. Department of Management and Economics, Naval University of Engineering, Wuhan 430033, China
2. Teaching and Research Support Center, Naval University of Engineering, Wuhan 430033, China
3. Department of Navigation Engineering, Naval University of Engineering, Wuhan 430033, China

Received: 2020-11-28; Online: 2022-01-01; Published: 2022-01-19

Contact: Rui WANG


Cite this article

Bin ZENG, Rui WANG, Houpu LI, Xu FAN. Scheduling strategies research based on reinforcement learning for wartime support force[J]. Systems Engineering and Electronics, 2022, 44(1): 199-208.


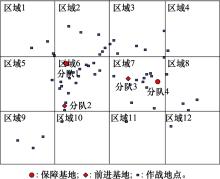

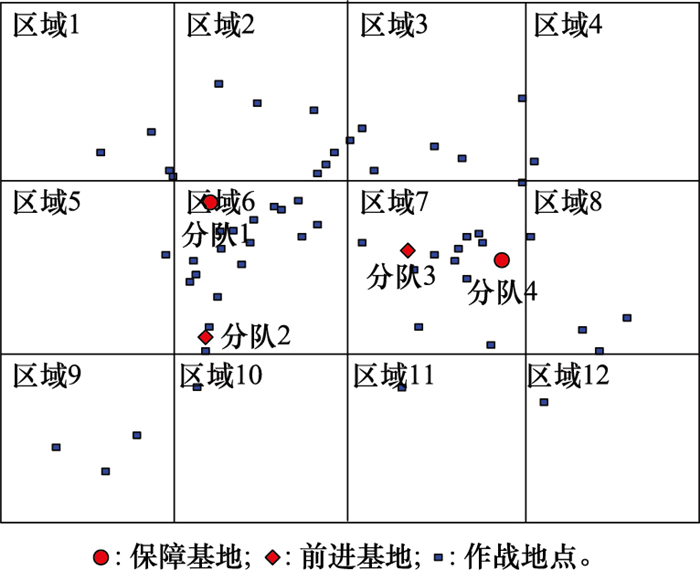

Table 1 Probability of support requests by combat zone and priority

| Combat zone | Urgent | Important | Routine |
|---|---|---|---|
| 1 | 0.0080 | 0.0080 | 0.0341 |
| 2 | 0.0182 | 0.0182 | 0.0773 |
| 3 | 0.0150 | 0.0150 | 0.0639 |
| 4 | 0.0027 | 0.0027 | 0.0114 |
| 5 | 0.0046 | 0.0046 | 0.0193 |
| 6 | 0.0496 | 0.0496 | 0.2109 |
| 7 | 0.0348 | 0.0348 | 0.1480 |
| 8 | 0.0095 | 0.0095 | 0.0404 |
| 9 | 0.0087 | 0.0087 | 0.0370 |
| 10 | 0.0046 | 0.0046 | 0.0195 |
| 11 | 0.0028 | 0.0028 | 0.0119 |
| 12 | 0.0015 | 0.0015 | 0.0060 |
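The entries of Table 1 sum to about 0.9997. The urgent and important columns each total 0.16 and are identical in every zone. A plausible reading, which is an assumption here and not a statement from the paper, is that each entry is the joint probability that one incoming support request comes from a given zone with a given priority (urgent, important, routine). Under that assumption, the minimal Python sketch below encodes the table, computes the priority marginals, and draws synthetic requests, for example to drive a scheduling simulation. All names in it are hypothetical.

```python
import random

# Table 1: zone -> (urgent, important, routine) probabilities.
# Assumption: each entry is P(zone, priority) for a single incoming request.
REQUEST_PROB = {
    1: (0.0080, 0.0080, 0.0341),
    2: (0.0182, 0.0182, 0.0773),
    3: (0.0150, 0.0150, 0.0639),
    4: (0.0027, 0.0027, 0.0114),
    5: (0.0046, 0.0046, 0.0193),
    6: (0.0496, 0.0496, 0.2109),
    7: (0.0348, 0.0348, 0.1480),
    8: (0.0095, 0.0095, 0.0404),
    9: (0.0087, 0.0087, 0.0370),
    10: (0.0046, 0.0046, 0.0195),
    11: (0.0028, 0.0028, 0.0119),
    12: (0.0015, 0.0015, 0.0060),
}
PRIORITIES = ("urgent", "important", "routine")


def priority_marginals(table):
    """Sum each priority column over all zones."""
    totals = [0.0] * len(PRIORITIES)
    for probs in table.values():
        for i, p in enumerate(probs):
            totals[i] += p
    return dict(zip(PRIORITIES, totals))


def sample_requests(table, n, seed=0):
    """Draw n (zone, priority) pairs from the joint distribution.

    random.choices scales by the weight total, so the small rounding
    shortfall in the published table does not need to be corrected.
    """
    rng = random.Random(seed)
    outcomes, weights = [], []
    for zone, probs in table.items():
        for priority, p in zip(PRIORITIES, probs):
            outcomes.append((zone, priority))
            weights.append(p)
    return rng.choices(outcomes, weights=weights, k=n)


if __name__ == "__main__":
    total = sum(sum(probs) for probs in REQUEST_PROB.values())
    print(f"total probability: {total:.4f}")
    print(priority_marginals(REQUEST_PROB))
    print(sample_requests(REQUEST_PROB, 5))
```

Sampling from the joint table rather than from separate zone and priority tables keeps any zone-priority correlation, although in this table the routine column is roughly 4.25 times the urgent one in every zone.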

Table 2 Mean transport time (min) from each support detachment to each combat zone

| Combat zone | Detachment 1 | Detachment 2 | Detachment 3 | Detachment 4 |
|---|---|---|---|---|
| 1 | 51.689 | 66.657 | 73.665 | 83.989 |
| 2 | 58.997 | 73.966 | 65.113 | 73.639 |
| 3 | 73.381 | 83.702 | 63.339 | 66.146 |
| 4 | 83.129 | 90.959 | 66.423 | 62.208 |
| 5 | 45.475 | 52.612 | 65.170 | 75.718 |
| 6 | 48.728 | 52.504 | 58.221 | 68.596 |
| 7 | 66.371 | 67.606 | 45.847 | 45.999 |
| 8 | 86.781 | 86.022 | 64.537 | 55.094 |
| 9 | 100.590 | 84.631 | 108.610 | 116.710 |
| 10 | 75.851 | 58.163 | 81.231 | 89.782 |
| 11 | 77.564 | 69.977 | 62.538 | 64.677 |
| 12 | 94.617 | 90.921 | 72.327 | 63.278 |
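Table 2 is the kind of input a dispatch rule consumes. As a point of reference only, the minimal Python sketch below encodes the table and implements a greedy nearest-available rule: send the idle detachment with the smallest mean transport time to the requesting zone. This myopic rule is an illustrative baseline assumed here, not the reinforcement-learning strategy the paper proposes, and the function name is hypothetical.

```python
# Table 2: zone -> mean transport time (min) for detachments 1-4.
TRANSPORT_MIN = {
    1: (51.689, 66.657, 73.665, 83.989),
    2: (58.997, 73.966, 65.113, 73.639),
    3: (73.381, 83.702, 63.339, 66.146),
    4: (83.129, 90.959, 66.423, 62.208),
    5: (45.475, 52.612, 65.170, 75.718),
    6: (48.728, 52.504, 58.221, 68.596),
    7: (66.371, 67.606, 45.847, 45.999),
    8: (86.781, 86.022, 64.537, 55.094),
    9: (100.590, 84.631, 108.610, 116.710),
    10: (75.851, 58.163, 81.231, 89.782),
    11: (77.564, 69.977, 62.538, 64.677),
    12: (94.617, 90.921, 72.327, 63.278),
}


def nearest_available(zone, available):
    """Return (detachment, minutes) for the idle detachment closest to `zone`.

    `available` is a set of detachment numbers (1-4). Returns None when no
    detachment is idle, in which case the request would have to wait.
    """
    if not available:
        return None
    times = TRANSPORT_MIN[zone]
    best = min(available, key=lambda d: times[d - 1])
    return best, times[best - 1]


if __name__ == "__main__":
    all_units = {1, 2, 3, 4}
    for zone in TRANSPORT_MIN:
        print(zone, nearest_available(zone, all_units))
    # With detachment 3 busy, zone 7 falls back to detachment 4.
    print(nearest_available(7, {1, 2, 4}))
```

Because the rule looks only at the current request, it can commit the detachment that best serves a high-probability zone to a routine request elsewhere; weighing that kind of future cost is what a learned scheduling policy would plausibly be expected to improve on.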

